- 1. Node.js TypeScript #1. Modules, process arguments, basics of the File System

- 2. Node.js TypeScript #2. The synchronous nature of the EventEmitter

- 3. Node.js TypeScript #3. Explaining the Buffer

- 4. Node.js TypeScript #4. Paused and flowing modes of a readable stream

- 5. Node.js TypeScript #5. Writable streams, pipes, and the process streams

- 6. Node.js TypeScript #6. Sending HTTP requests, understanding multipart/form-data

- 7. Node.js TypeScript #7. Creating a server and receiving requests

- 8. Node.js TypeScript #8. Implementing HTTPS with our own OpenSSL certificate

- 9. Node.js TypeScript #9. The Event Loop in Node.js

- 10. Node.js TypeScript #10. Is Node.js single-threaded? Creating child processes

- 11. Node.js TypeScript #11. Harnessing the power of many processes using a cluster

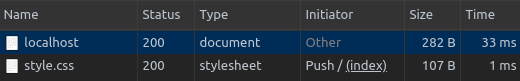

- 12. Node.js TypeScript #15. Benefits of the HTTP/2 protocol

- 13. Node.js TypeScript #12. Introduction to Worker Threads with TypeScript

- 14. Node.js TypeScript #13. Sending data between Worker Threads

- 15. Node.js TypeScript #14. Measuring processes & worker threads with Performance Hooks

The HTTP protocol, introduced in 1991, is almost 30 years old. It has come a long way since the first documented version, later called 0.9. In this article, we briefly go through the history of HTTP, focus on what HTTP/2 brings and how we can benefit from it, and implement it in a Node.js server.

A brief history of the HTTP protocol

The first version of HTTP could only transfer HyperText Markup Language (HTML) files, and therefore we call it HyperText Transfer Protocol. It was truly simple, and the only method available was GET. It had no HTTP headers or status codes. In case of a problem, the server could respond with an HTML file describing the error.

In 1996, version 1.0 emerged. It added many improvements over the previous version, the most essential being status codes, headers, and additional methods like POST. We could now use the Content-Type header to transmit files other than plain HTML.

HTTP/1.1, published in 1997, introduced additional improvements. Aside from adding methods like OPTIONS, it made persistent (keep-alive) connections the default. A single connection could remain open for multiple HTTP requests, so it didn’t have to close after each request and reopen later. Browsers typically open at most six connections per host with HTTP/1.1. Each one of them can handle one request at a time. If a request gets stuck, for example, due to some complex logic on the server, the whole connection freezes and waits for the response. This issue is called head-of-line blocking.

Benefiting from implementing Node.js HTTP/2

There are quite a few ways of implementing HTTP/2 in your stack. A common approach might be to implement it on the web server, but in this article, we do it in the application layer to expand our Node.js knowledge. The first thing to know is that browsers do not support unencrypted HTTP/2. That means we need to serve our application over TLS, that is, over HTTPS. To do this locally, we generate a self-signed certificate with the following command:

```shell
openssl req -x509 -newkey rsa:4096 -keyout key.pem -out certificate.pem -days 365 -nodes
```

If you want to know the details, check out Implementing HTTPS with our own OpenSSL certificate

```typescript
import * as http2 from 'http2';
import * as fs from 'fs';
import * as util from 'util';

const readFile = util.promisify(fs.readFile);

async function startServer() {
  // Load the private key and the certificate generated with openssl
  const [key, cert] = await Promise.all([
    readFile('key.pem'),
    readFile('certificate.pem')
  ]);

  const server = http2.createSecureServer({ key, cert })
    .listen(8080, () => {
      console.log('Server started');
    });

  // Every incoming request arrives as a separate HTTP/2 stream
  server.on('stream', (stream, headers) => {
    stream.respond({
      'content-type': 'text/html',
      ':status': 200
    });
    stream.end('<h1>Hello World</h1>');
  });

  server.on('error', (err) => console.error(err));
}

// Report a failed startup, for example when the certificate files are missing
startServer().catch((err) => console.error(err));
```

Solving the head-of-line blocking issue

In some complex applications, even six parallel connections, as with HTTP/1.1, might not be enough, especially when we experience head-of-line blocking. HTTP/2 solves this issue by allowing one connection to handle multiple requests at once. Thanks to that, even if one of them is stuck, the others can continue.

In the simple example above, we respond with plain HTML. The “stream” event fires every time someone makes a request to our server.

If you want to know more about streams, check out Paused and flowing modes of a readable stream & Writable streams, pipes, and the process streams

In the headers argument, we have all the headers of the incoming request. It’s a way for us to check things like the method of a request (the :method pseudo-header) and the path (the :path pseudo-header).
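As a minimal sketch of reading those values, the helper below pulls the method and the path out of a headers object. The names `HeaderMap`, `RequestSummary`, `first` and `describeRequest` are made up for this illustration, and the base URL is only a placeholder needed to parse the query string.

```typescript
// A structural stand-in for the headers object passed to the 'stream' handler.
type HeaderMap = Record<string, string | string[] | undefined>;

interface RequestSummary {
  method: string;
  pathname: string;
  query: URLSearchParams;
}

// Header values may be strings or arrays, so normalise them to a single string.
function first(value: string | string[] | undefined, fallback: string): string {
  if (Array.isArray(value)) {
    return value[0] ?? fallback;
  }
  return value ?? fallback;
}

// Hypothetical helper: summarise the request using the HTTP/2 pseudo-headers.
function describeRequest(headers: HeaderMap): RequestSummary {
  const method = first(headers[':method'], 'GET');
  const rawPath = first(headers[':path'], '/');
  // ':path' also carries the query string, so split it with the URL parser.
  // The base is a placeholder; only the path and the query are used.
  const url = new URL(rawPath, 'http://placeholder.invalid');
  return { method, pathname: url.pathname, query: url.searchParams };
}

// Possible use inside the handler shown earlier:
// server.on('stream', (stream, headers) => {
//   const { method, pathname } = describeRequest(headers);
//   console.log(method, pathname);
// });
```

Splitting the path this way keeps the routing logic independent of any query parameters the client appends.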

Now, since we use HTTP/2, the browser sends its requests over a single connection without one request blocking the others. A noteworthy example of that is a demo created by the Golang team. It consists of a grid of 180 tiled images. You can see a significant performance improvement when using HTTP/2, because the requests are handled in parallel over the same connection, which deals with the head-of-line blocking issue.
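The same effect can be observed without a browser. The sketch below is only an illustration under a few assumptions: it uses an unencrypted HTTP/2 server, which the Node.js client accepts even though browsers do not, and the helper names `get` and `demo`, the `/slow` and `/fast` paths, and the 200 ms delay are invented for the example.

```typescript
import * as http2 from 'http2';
import { AddressInfo } from 'net';

// Hypothetical helper: send one GET request over an existing HTTP/2 session.
function get(session: http2.ClientHttp2Session, path: string): Promise<string> {
  return new Promise<string>((resolve, reject) => {
    const request = session.request({ ':path': path });
    let body = '';
    request.setEncoding('utf8');
    request.on('data', (chunk) => { body += chunk; });
    request.on('end', () => resolve(body));
    request.on('error', reject);
    request.end();
  });
}

// Starts a throwaway server, requests a slow and a fast path over ONE
// connection, and resolves with the paths in the order they finished.
async function demo(): Promise<string[]> {
  const server = http2.createServer();
  server.on('stream', (stream, headers) => {
    // Simulate complex logic on the server for the '/slow' path only
    const delay = headers[':path'] === '/slow' ? 200 : 0;
    setTimeout(() => {
      stream.respond({ ':status': 200, 'content-type': 'text/plain' });
      stream.end(String(headers[':path']));
    }, delay);
  });

  // Port 0 asks the operating system for any free port
  await new Promise<void>((resolve) => {
    server.listen(0, '127.0.0.1', () => resolve());
  });
  const { port } = server.address() as AddressInfo;

  const session = http2.connect(`http://127.0.0.1:${port}`);
  const finished: string[] = [];
  await Promise.all(['/slow', '/fast'].map(async (path) => {
    finished.push(await get(session, path));
  }));

  session.close();
  server.close();
  return finished;
}
```

Calling `demo().then(console.log)` should list `/fast` before `/slow`, even though the slow request was sent first over the same connection.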

Header compression

HTTP/2 uses a new header compression algorithm called HPACK. Some of the developers involved in defining the HTTP/2 protocol had previously worked on SPDY, which also compressed headers. Unfortunately, the approach used in SPDY was found to be vulnerable to the Compression Ratio Info-leak Made Easy (CRIME) exploit. Not only does HPACK make the whole process more secure, but it is also faster in some cases.
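One idea behind HPACK is that both sides remember header fields that were already sent, so a repeated field can be replaced with a short index. The class below is a toy illustration of that idea only, not the real algorithm: actual HPACK also has a predefined static table, Huffman coding, size limits with eviction, and a binary wire format. The names `ToyHeaderTable` and `Encoded` are made up.

```typescript
type HeaderField = [string, string];

// Either a reference to a field sent earlier, or the full field itself.
type Encoded = { index: number } | { literal: HeaderField };

class ToyHeaderTable {
  // Fields seen so far on this connection, in the order they were first sent
  private readonly entries: string[] = [];

  encode(fields: HeaderField[]): Encoded[] {
    return fields.map(([name, value]): Encoded => {
      const key = `${name}: ${value}`;
      const index = this.entries.indexOf(key);
      if (index !== -1) {
        // Already sent before: a small index is enough
        return { index };
      }
      // First occurrence: send it in full and remember it for next time
      this.entries.push(key);
      return { literal: [name, value] };
    });
  }
}

// Usage sketch: the second request repeats the same headers,
// so every field collapses to an index.
// const table = new ToyHeaderTable();
// table.encode([['user-agent', 'demo'], ['accept', '*/*']]);
// table.encode([['user-agent', 'demo'], ['accept', '*/*']]);
```

Since browsers tend to repeat headers such as user-agent and cookies on every request, this kind of indexing can save a noticeable amount of bandwidth on a long-lived connection.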

Caching data using Server Push

All of the above improvements work out of the box when we implement HTTP/2, but that is not all we can do. With Server Push, we can populate the client cache with data even before the browser requests it. A basic example of that is a situation when the user requests an index.html file.

```typescript
server.on('stream', (stream, headers) => {
  const path = headers[':path'];

  switch (path) {
    case '/': {
      stream.respond({
        'content-type': 'text/html',
        ':status': 200
      });
      stream.end(`
        <head>
          <link rel="stylesheet" type="text/css" href="style.css">
        </head>
        <body>
          <h1>Hello World</h1>
        </body>
      `);
      break;
    }
    case '/style.css': {
      stream.respond({
        'content-type': 'text/css',
        ':status': 200
      });
      stream.end(`
        body {
          color: red;
        }
      `);
      break;
    }
    default: {
      stream.respond({
        ':status': 404
      });
      stream.end();
    }
  }
});
```

In the above example, when the user visits the main page, the browser requests the index.html file. Once it receives the response, it notices that it also needs the style.css file. There is some delay between requesting index.html and style.css, and with Server Push we can avoid it. Since we know that the browser is going to need the style.css file, we can send it along with index.html.

```typescript
server.on('stream', (stream, headers) => {
  const path = headers[':path'];

  switch (path) {
    case '/': {
      // Push the stylesheet before the browser asks for it
      stream.pushStream({ ':path': '/style.css' }, (err, pushStream) => {
        if (err) {
          // Log instead of throwing, so a failed push does not crash the server
          console.error(err);
          return;
        }
        pushStream.respond({
          'content-type': 'text/css',
          ':status': 200
        });
        pushStream.end(`
          body {
            color: red;
          }
        `);
      });
      stream.respond({
        'content-type': 'text/html',
        ':status': 200
      });
      stream.end(`
        <head>
          <link rel="stylesheet" type="text/css" href="style.css">
        </head>
        <body>
          <h1>Hello World</h1>
        </body>
      `);
      break;
    }
    case '/style.css': {
      stream.respond({
        'content-type': 'text/css',
        ':status': 200
      });
      stream.end(`
        body {
          color: red;
        }
      `);
      break;
    }
    default: {
      stream.respond({
        ':status': 404
      });
      stream.end();
    }
  }
});
```

Now, using the stream.pushStream function, we send the style.css file as soon as someone requests index.html. When the browser parses the HTML, it sees the <link> tag and notices that it also needs the style.css file. Thanks to Server Push, the file is already cached in the browser, so it does not need to send another request.
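A client can tell the server that it does not accept pushed streams, and Node.js exposes that choice through the `pushAllowed` property of the stream. The helper below is a sketch of how we might guard the push with it. The name `pushStylesheet` and its return value are assumptions made for this example.

```typescript
import * as http2 from 'http2';

// Hypothetical helper: push /style.css only when the client accepts pushes.
// Returns true if a push was attempted and false if it was skipped.
function pushStylesheet(stream: http2.ServerHttp2Stream, css: string): boolean {
  // pushAllowed reflects the enable-push setting most recently sent by the client
  if (!stream.pushAllowed) {
    return false;
  }

  stream.pushStream({ ':path': '/style.css' }, (err, pushStream) => {
    if (err) {
      // The page still works without the push; the browser requests the file itself
      console.error(err);
      return;
    }
    pushStream.respond({
      'content-type': 'text/css',
      ':status': 200
    });
    pushStream.end(css);
  });
  return true;
}
```

Because the '/style.css' case stays in the switch statement, a client that refuses the push can still fetch the stylesheet with a regular request.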

Summary

HTTP/2 aims to improve performance by meeting the needs of web pages that keep getting more complex. The amount of data that we send using the HTTP protocol keeps increasing, and HTTP/2 approaches the issue by dealing with head-of-line blocking, among other things. Thanks to parallel requests handled over the same connection, it lifts some of the restrictions of HTTP/1.1.
